Will Tesla FSD decide the fate of lidar in China in 2024?

In 2023, 800V high-voltage platforms were the trend in new energy vehicles, targeting charging time and energy consumption. In 2024, the trend will be lidar, highway NOA and urban NOA, with brands competing on stronger algorithms and a wider rollout of advanced intelligent driving. In the end, it may come down to who has the stronger neural network and the faster learning ability, and who can deliver the better NOA experience.

At least the new brands we are familiar with have made some breakthroughs in intelligent driving. At present, though, the market is split between lidar fusion perception and pure visual perception. These two schemes account for all of today's advanced intelligent driving, whose functions include urban and highway NOA.

So is an urban NOA scheme without lidar viable in 2024?

LOOKAR

Lidar helps a great deal.

If 2022 was the first year of lidar mass production and 2023 the year installation volumes began to take off, then 2024 can be called the year urban NOA (navigation-assisted driving on city streets) truly lands. Companies such as AITO, Huawei, Li Auto and Xpeng have gradually opened the function in their target cities, each in more than one city. The arrival of urban NOA has also raised a follow-on question.

Is lidar important for urban NOA?

Before addressing that question, we need to sort out what urban NOA includes. In earlier years, adding adaptive cruise control (ACC), lane keeping and similar features made driving somewhat easier, and that was the highest level of assisted driving available at the time.

Today, by contrast, urban NOA provides assisted driving from point A to point B along the navigation route: following the car ahead, stopping and merging; turning left and right at intersections and stopping or going according to traffic lights; and automated parking in parking lots. Realizing these functions requires a set of powerful, accurate sensing hardware, in addition to chips with high computing power.

The point of the above is that sensing hardware faces very complex and changeable conditions in urban NOA. Compared with highway NOA, the difficulty of urban NOA is multiplied.

There are currently two kinds of perception-centric schemes. The first mostly uses lidar. The second, pure-vision urban NOA, has been growing since the second half of 2023, with examples such as Baidu Apollo City Driving Max, the DJI Automotive platform (9V), Tesla FSD and Jiyue.

Let's talk about the underlying logic.

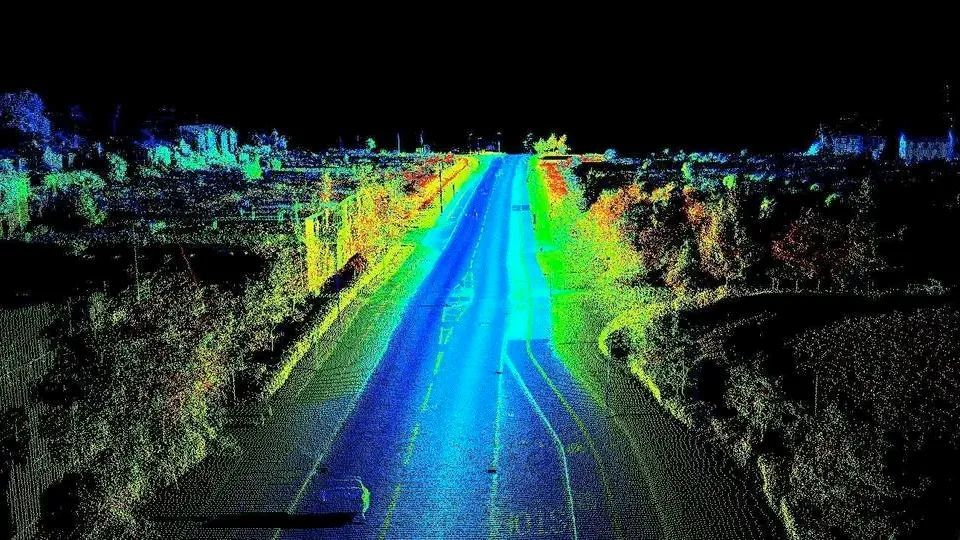

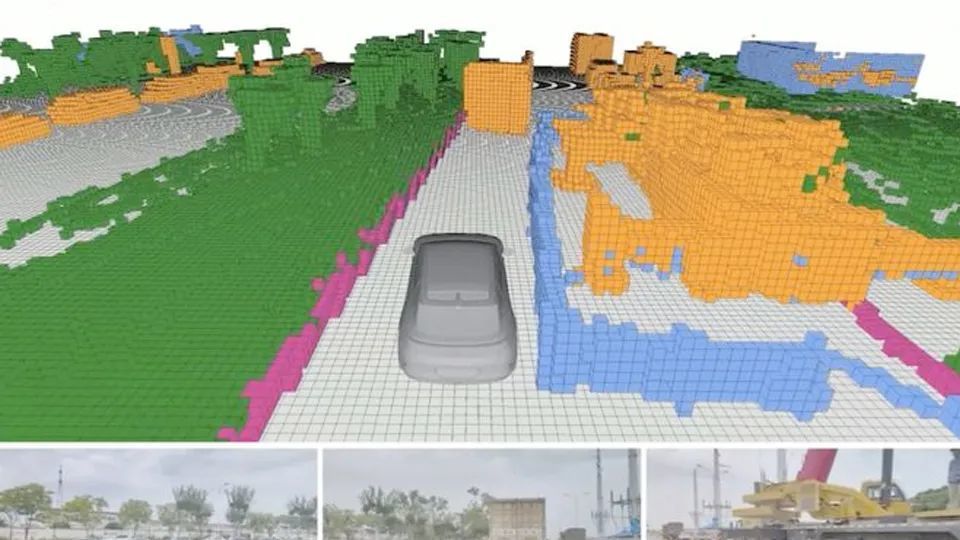

Visual perception simply means using cameras as the main perception hardware, so the information they provide carries most of the weight in decision-making. The remaining ultrasonic and millimeter-wave radars play only a supporting role in this system. The other core element is the large model and the learning ability behind it: the information collected by the cameras is used for 3D reconstruction, and objects are then identified from the result.

A lidar scheme mainly relies on the lidar point cloud to detect objects. Point clouds can be generated at rates of hundreds of thousands of points per second, with an accuracy that is hard to match when camera images are converted into a 3D model. If one forward-facing lidar is not enough, two lateral units and one rear unit can be added to give 360° detection coverage around the vehicle. Lidar schemes are also moving toward large models and gradually away from high-definition maps, which makes them look somewhat similar to pure vision, but the data accuracy lidar provides is far superior to that of the pure vision scheme.

Leaving aside large-scale scenes, take the quasi-L3 scenario of automated parking: the parking function requires very accurate data about surrounding objects. With lateral lidar added, objects can be detected at distances down to 0.05 meters. With a vision scheme, lateral perception can only be supported by millimeter-wave and ultrasonic radar.

Does the vision scheme not work?

Visual perception is cheap, but its disadvantages are prominent: weak detection of depth information and weak construction of three-dimensional space are long-standing problems. Large models have now emerged to make up for these two shortcomings. Jiyue, working with Baidu, is the first in China to use occupancy network technology.

Tesla is also using this technology.

The general idea is to learn which parts of space are occupied, represent the three-dimensional space through 3D reconstruction, and use camera imagery plus algorithms to achieve an effect similar to a lidar point cloud. How reliably this visual perception scheme works is uncertain. For example, a Tesla Model Y crashed into a white semi-trailer truck at an intersection in southwest Detroit, USA. According to the accident report, the car had Autopilot engaged at the time, but the system's AI algorithm identified the white truck trailer stopped on the road as the sky.

As a result, the vehicle crashed straight into it without braking at all. This is the weakness of the vision scheme. By comparison, lidar works more like a sense of "touch": it can detect a solid object hundreds of meters away, so a white vehicle will not be mistaken for the sky as it can be by visual judgment.
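The occupancy idea described above, deciding which parts of 3D space are filled regardless of what the object is, can be illustrated with a minimal sketch. This is not Tesla's or Baidu's implementation: a real occupancy network predicts the grid from camera images with a neural network, whereas this toy code simply bins already-known 3D points into voxels. The function names `voxelize` and `path_blocked`, the 0.5 m voxel size, the corridor dimensions and the sample points are all hypothetical.

```python
import math

def voxelize(points, voxel_size=0.5):
    """Map 3D points (x, y, z in meters) to the set of occupied voxel indices."""
    occupied = set()
    for x, y, z in points:
        occupied.add((math.floor(x / voxel_size),
                      math.floor(y / voxel_size),
                      math.floor(z / voxel_size)))
    return occupied

def path_blocked(occupied, voxel_size=0.5, lane_half_width=1.0,
                 max_range=30.0, max_height=2.0):
    """Return True if any occupied voxel center lies in the corridor ahead.

    Assumed frame (hypothetical): x forward, y left, z up, origin on the
    ground at the front bumper.
    """
    for ix, iy, iz in occupied:
        # Use the voxel center as the representative position.
        cx = (ix + 0.5) * voxel_size
        cy = (iy + 0.5) * voxel_size
        cz = (iz + 0.5) * voxel_size
        if (0.0 <= cx <= max_range and abs(cy) <= lane_half_width
                and 0.0 < cz <= max_height):
            return True
    return False

# Two points on an obstacle about 12 m ahead, one point far off to the side.
points = [(12.3, 0.2, 1.1), (12.4, -0.4, 1.6), (40.0, 5.0, 0.5)]
grid = voxelize(points)
print(path_blocked(grid))  # True
```

Note that the check never asks what the object is, only whether the space ahead is occupied. Under the assumptions of this sketch, a white trailer would count as an obstacle whether or not a classifier could name it.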

Put simply, even Tesla has not yet produced a fully mature pure vision scheme. At this stage, the work is mostly about improving the algorithms behind pure vision. That indirectly shows this road is not an easy one, and that it is hard to justify unless low cost is the goal. However, after Tesla released FSD V12 and brought in neural-network learning, some shortcomings of pure vision were alleviated: the system no longer relies purely on data but has strong learning ability, and in similar scenes it can act according to what it has learned from human drivers. In real-world tests in the US market it is relatively smooth and only occasionally needs a human to take over. That is sufficient for today's L2 intelligent assisted driving, but not yet for L3 scenarios.

In terms of capability, basic driving has been achieved. For example, highway NOA can perform about as well as with lidar, and it works well as long as the strategy is conservative enough. In urban NOA, however, pure vision is still struggling with some difficult scenarios, such as vehicles parked at the roadside. The vision scheme may be unable to tell whether such a car is moving. If the car suddenly pulls out, the visual judgment may still treat it as static, so sensitivity is low.

Judging from today's pure visual perception schemes, there are two directions of development.

First, pure vision can break into intelligent driving for vehicles priced below 200,000 yuan on the strength of its cost advantage. In this form it is not suited to urban NOA. It mainly supports highway NOA and speeds up the rollout of lower-level intelligent driving. Second, it can cover both urban and highway NOA, but the algorithm strategy will tend to be more conservative, and overall comfort will not match that of schemes with lidar.

Some companies in the industry lean toward the first direction.

In 2024, lidar will become a must-have configuration for most brands, especially those using the ADS advanced assisted driving system provided by Huawei. Some companies, such as NIO and Li Auto, are firmly committed to lidar. Xpeng remains cautious about lidar (it is interested in pure vision, but hesitant), yet its current scheme is still based on lidar.

This is not to deny the potential of the vision scheme, but its performance limitations have to be faced squarely. It can handle urban NOA, and Tesla keeps improving urban NOA under pure visual perception. Beyond that, the different driving habits across Chinese cities must be taken into account, which will place an enormous and cumbersome "learning burden" on vision schemes that rely more heavily on algorithms.

A few questions:

1. Is pure vision still necessary once major manufacturers have driven down the price of lidar?

2. If Tesla changes course once lidar is cheap, can today's pure-vision followers keep up?

3. Given the limitations of visual perception, is it less usable than lidar without a strong neural network?

In addition, it is very likely that Tesla FSD will land in the Chinese market this year, following successive rounds of testing in 2023. In the end, how well FSD performs may determine the direction of urban NOA, pure vision or lidar. The first problem FSD V12 faces in China is adapting to domestic scenarios. That is not a hard problem and should be solved in the short term. After adaptation, one question is whether the scheme used in the American market can handle urban NOA in China with the same results. Another is whether the rollout rate of NOA under Tesla FSD can match that of the new-force brands currently using lidar.

This year, the contest between the pure vision scheme led by Tesla and the lidar scheme chosen by a number of new-force brands may produce a clear answer before advanced intelligent driving reaches L3.

Author: Luka Automobile